These instructions assume that you want to use gcc 4.7 and Python 2.7; modify the `port install` and `port select --set` invocations if you want other versions. Then tell MOE's build where to find the MacPorts compilers and libraries:

$ export MOE_CMAKE_OPTS=-DCMAKE_FIND_ROOT_PATH=/opt/local && export MOE_CC_PATH=/opt/local/bin/gcc && export MOE_CXX_PATH=/opt/local/bin/g++
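If you use a package manager other than MacPorts, you can derive the same three variables from a single install prefix. The following is a minimal sketch that assumes bash. `MOE_PREFIX` is a hypothetical helper variable introduced here; MOE itself does not read it. The sketch also does not install anything. It only exports the variables and warns if the expected compilers are missing. Under Homebrew, for example, gcc may be installed under a versioned name, so the default paths might not exist.

```shell
#!/usr/bin/env bash
# Sketch: point MOE's build at a package manager's toolchain.
# MOE_PREFIX is a hypothetical helper variable (not read by MOE itself);
# it defaults to the MacPorts prefix /opt/local.
MOE_PREFIX="${MOE_PREFIX:-/opt/local}"

# The three variables from the MacPorts instructions, built from the prefix.
export MOE_CMAKE_OPTS="-DCMAKE_FIND_ROOT_PATH=${MOE_PREFIX}"
export MOE_CC_PATH="${MOE_PREFIX}/bin/gcc"
export MOE_CXX_PATH="${MOE_PREFIX}/bin/g++"

# Warn (but do not fail) if the compilers are not where we expect them.
for compiler in "$MOE_CC_PATH" "$MOE_CXX_PATH"; do
  if [ ! -x "$compiler" ]; then
    echo "warning: $compiler not found or not executable" >&2
  fi
done
```

Running it with `MOE_PREFIX=/some/other/prefix` set beforehand swaps every path at once. This avoids editing three separate strings.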

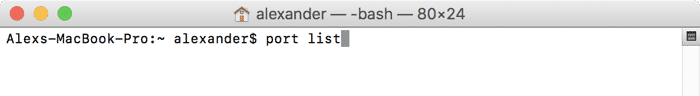

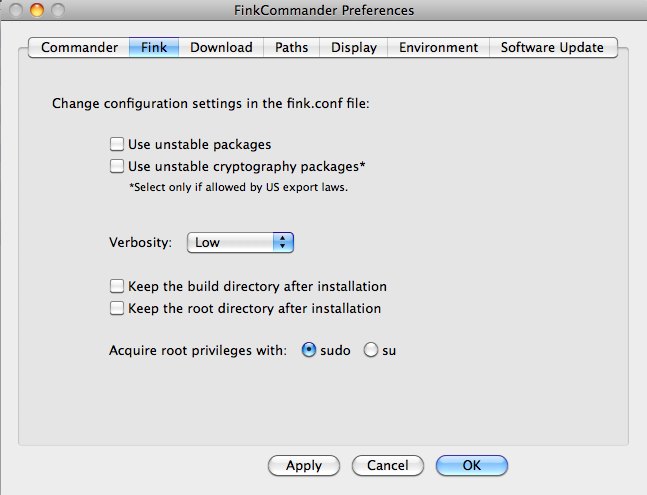

Read General MacPorts Tips if you are not familiar with MacPorts. MacPorts is one of many OS X package managers; we will use it to install MOE's core requirements.

MacPorts requires that your PATH variable include /opt/local/bin:/opt/local/sbin. It sets this in your shell's rcfile (e.g. .bashrc), but that command will not run immediately after MacPorts installation, so start a new shell or run export PATH=/opt/local/bin:/opt/local/sbin:$PATH.

If you are using MacPorts, make sure you create your virtualenv with the correct Python (--python=/opt/local/bin/python). If you are using another package manager (like Homebrew), you may need to change /opt/local in these paths to point to your Cellar directory.

For the following commands, order matters: items further down the list may depend on previous installs. In addition to this list, double-check that all items on Install from source are also installed.

$ sudo port install boost # <- DO NOT run this on OS X 10.9!
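Running `export PATH=/opt/local/bin:/opt/local/sbin:$PATH` repeatedly adds duplicate entries. The following is a minimal bash sketch that adds the MacPorts directories only if they are missing. `prepend_path` is a hypothetical helper, not part of MacPorts. It does not reorder directories that are already on PATH. If another `python` or `gcc` shadows the MacPorts one, starting a new shell is still the simpler fix.

```shell
#!/usr/bin/env bash
# Sketch: make sure the MacPorts directories are on PATH in the current
# shell, without adding duplicates if they are already present.
prepend_path() {
  # $1: directory to put at the front of PATH (if not already present)
  case ":${PATH}:" in
    *":$1:"*) ;;                     # already on PATH, leave it alone
    *) PATH="$1${PATH:+:$PATH}" ;;   # prepend; avoid a stray ':' if PATH is empty
  esac
}

# Prepend sbin first so that bin ends up in front of it,
# giving the order /opt/local/bin:/opt/local/sbin.
prepend_path /opt/local/sbin
prepend_path /opt/local/bin
export PATH
```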

# MacPorts list installed update

Are you sure you wouldn't rather be running Linux?

Download MacPorts. (If you change the install directory from /opt/local, don't forget to update the cmake invocation.)

OS X 10.9 users beware: do not install boost with MacPorts. You must install it from source; see the warnings below.
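To avoid running the boost install on OS X 10.9 by accident, you can wrap it in a version check. This is a minimal bash sketch. `is_os_x_10_9` and `install_boost_if_safe` are hypothetical helpers, not part of MOE or MacPorts. `sw_vers -productVersion` is the standard OS X command that prints the system version (e.g. `10.9.5`).

```shell
#!/usr/bin/env bash
# Sketch: refuse to install boost via MacPorts on OS X 10.9 (Mavericks),
# where this guide says boost must be built from source instead.
# is_os_x_10_9 and install_boost_if_safe are hypothetical helpers.
is_os_x_10_9() {
  # $1: a version string such as "10.9" or "10.9.5"
  case "$1" in
    10.9 | 10.9.*) return 0 ;;
    *) return 1 ;;
  esac
}

install_boost_if_safe() {
  # sw_vers ships with OS X and prints the OS version, e.g. "10.9.5".
  local version
  version="$(sw_vers -productVersion)"
  if is_os_x_10_9 "$version"; then
    echo "OS X $version detected: install boost from source, not MacPorts." >&2
    return 1
  fi
  sudo port install boost
}
```

On 10.9 the function prints a message and returns non-zero without calling `port`. On other versions it runs the same `sudo port install boost` command as above.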
